2 Notation and Definitions

2.1 Notation

Let’s start by revisiting the mathematical notation we all learned at school, but some likely forgot right after the prom.

2.1.1 Scalars, Vectors, and Sets

A scalar is a simple numerical value, like 15 or −3.25. Variables or constants that take scalar values are denoted by an italic letter, like x or a.

Figure 1: Three vectors visualized as directions and as points.

A vector is an ordered list of scalar values, called attributes. We denote a vector as a bold character, for example, $\mathbf{x}$ or $\mathbf{w}$. Vectors can be visualized as arrows that point in some direction, or as points in a multi-dimensional space. Figure 1 illustrates three two-dimensional vectors: $\mathbf{a} = [2, 3]$, $\mathbf{b} = [-2, 5]$, and $\mathbf{c} = [1, 0]$. We denote an attribute of a vector as an italic value with an index, like this: $w^{(j)}$ or $x^{(j)}$. The index $j$ denotes a specific dimension of the vector, that is, the position of an attribute in the list. For instance, in the vector $\mathbf{a}$ shown in red in Figure 1, $a^{(1)} = 2$ and $a^{(2)} = 3$. The notation $x^{(j)}$ should not be confused with the power operator, as in $x^2$ (squared) or $x^3$ (cubed). To apply a power operator, say square, to an indexed attribute of a vector, we write $(x^{(j)})^2$.
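A minimal Python sketch of the three vectors from Figure 1, assuming vectors are stored as plain lists. Python indexes from 0 while the notation counts attributes from 1, so the hypothetical helper `attr` shifts the index by one; the last line squares an attribute, which is a separate operation from indexing it.

```python
# The three vectors from Figure 1, stored as plain Python lists.
a = [2, 3]
b = [-2, 5]
c = [1, 0]

def attr(v, j):
    """Hypothetical helper: return attribute v^(j) using 1-based j, as in the notation."""
    return v[j - 1]  # Python lists are 0-indexed

print(attr(a, 1), attr(a, 2))  # 2 3
# (a^(2))^2: first pick the attribute, then apply the power operator.
print(attr(a, 2) ** 2)         # 9
```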

A variable can have two or more indices, like this: $x_i^{(j)}$ or like this: $x_{i,j}^{(k)}$. For example, in neural networks, we denote as $x_{l,u}^{(j)}$ the input feature $j$ of unit $u$ in layer $l$.
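One way to read multiple indices is as nested lookups. The Python sketch below is illustrative only: it assumes a dataset stored as a list of example vectors (so the subscript picks the example and the superscript picks the attribute) and stores per-unit neural network inputs in a dictionary keyed by (layer, unit). The helpers `get_x` and `feature` and all values are hypothetical.

```python
# x_i^(j): attribute j of example i, with the dataset stored as a list of vectors.
X = [
    [2.0, 3.0],   # x_1
    [-2.0, 5.0],  # x_2
    [1.0, 0.0],   # x_3
]

def get_x(i, j):
    """Return x_i^(j) using 1-based i and j, as in the notation."""
    return X[i - 1][j - 1]

# x_{l,u}^(j): input feature j of unit u in layer l.
# Keys are (layer, unit); the values are made up for illustration.
inputs = {
    (1, 1): [0.5, -1.0],
    (1, 2): [0.25, 2.0],
}

def feature(l, u, j):
    """Return x_{l,u}^(j) using 1-based j."""
    return inputs[(l, u)][j - 1]

print(get_x(2, 1))       # -2.0
print(feature(1, 2, 2))  # 2.0
```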

2.2 Bayes’ Rule

The conditional probability $\Pr(X = x \mid Y = y)$ is the probability that the random variable $X$ takes a specific value $x$ given that another random variable $Y$ takes a specific value $y$. Bayes' Rule (also known as Bayes' Theorem) stipulates that:

$$\Pr(X = x \mid Y = y) = \frac{\Pr(Y = y \mid X = x)\,\Pr(X = x)}{\Pr(Y = y)}$$
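To make the rule concrete, here is a small Python sketch with made-up numbers for a medical test: X is whether a patient is sick and Y is whether the test comes back positive. The prior, the test's hit rate, and its false-positive rate are hypothetical; the denominator Pr(Y = y) is obtained by summing over both values of X (the law of total probability).

```python
def posterior(likelihood, prior, evidence):
    """Bayes' Rule: Pr(X=x | Y=y) = Pr(Y=y | X=x) * Pr(X=x) / Pr(Y=y)."""
    return likelihood * prior / evidence

# Hypothetical numbers: X = "sick", Y = "test is positive".
p_sick = 0.01               # prior Pr(X = sick)
p_pos_given_sick = 0.9      # likelihood Pr(Y = pos | X = sick)
p_pos_given_healthy = 0.05  # Pr(Y = pos | X = healthy)

# Evidence Pr(Y = pos) via the law of total probability over both values of X.
p_pos = p_pos_given_sick * p_sick + p_pos_given_healthy * (1 - p_sick)

p_sick_given_pos = posterior(p_pos_given_sick, p_sick, p_pos)
print(round(p_sick_given_pos, 4))  # 0.1538
```

With these numbers, a positive test raises the probability of being sick from 1% to only about 15%, because the small prior dominates.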

2.3 Classification vs. Regression

Classification is a problem of automatically assigning a label to an unlabeled example. Spam detection is a famous example of classification. In machine learning, the classification problem is solved by a classification learning algorithm that takes a collection of labeled examples as inputs and produces a model that can take an unlabeled example as input and either directly output a label or output a number that can be used by the data analyst to deduce the label easily. An example of such a number is a probability.
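When a classifier outputs a probability rather than a label, the analyst still has to turn that number into a class. A minimal Python sketch, assuming a binary spam model whose output is the probability of "spam" and a cut-off of 0.5; the threshold is a choice made by the analyst, not something fixed by the model.

```python
def label_from_probability(p_spam, threshold=0.5):
    """Map a model's probability of 'spam' to a label; the 0.5 default is an assumption."""
    return "spam" if p_spam >= threshold else "not_spam"

print(label_from_probability(0.83))  # spam
print(label_from_probability(0.12))  # not_spam
```

Raising the threshold (say, to 0.9) makes the classifier label fewer messages as spam, trading missed spam for fewer false alarms.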

In a classification problem, a label is a member of a finite set of classes. If the size of the set of classes is two (“sick”/“healthy”, “spam”/“not_spam”), we talk about binary classification (also called binomial in some books). Multiclass classification (also called multinomial) is a classification problem with three or more classes. While some learning algorithms naturally allow for more than two classes, others are by nature binary classification algorithms. There are strategies that turn a binary classification learning algorithm into a multiclass one.

Regression is a problem of predicting a real-valued label (often called a target) given an unlabeled example. Estimating a house's price from its features, such as area, number of bedrooms, and location, is a famous example of regression.

The regression problem is solved by a regression learning algorithm that takes a collection of labeled examples as inputs and produces a model that can take an unlabeled example as input and output a target.
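As an illustration of a regression learning algorithm, the Python sketch below fits ordinary least squares with a single feature, one of the simplest choices and not the only one. The house areas and prices are hypothetical, chosen to lie exactly on a line so the learned parameters are easy to check.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ w * x + b with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

# Hypothetical labeled examples: area (m^2) -> price (thousands).
areas = [50.0, 80.0, 100.0, 120.0]
prices = [150.0, 240.0, 300.0, 360.0]

w, b = fit_line(areas, prices)  # the learned model is just (w, b)

# Predict a real-valued target for an unlabeled 90 m^2 house.
print(w * 90.0 + b)  # 270.0
```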

2.4 Model-Based vs. Instance-Based Learning

Most supervised learning algorithms are model-based. We have already seen one such algorithm: SVM. Model-based learning algorithms use the training data to create a model that has parameters learned from the training data. In SVM, the two parameters we saw were $\mathbf{w}^*$ and $b^*$. After the model has been built, the training data can be discarded.

Instance-based learning algorithms use the whole dataset as the model. One instance-based algorithm frequently used in practice is k-Nearest Neighbors (kNN). In classification, to predict a label for an input example, the kNN algorithm looks at the close neighborhood of the input example in the space of feature vectors and outputs the label that it saw most often in this neighborhood.
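The contrast between the two families can be sketched in Python. The model-based part keeps only a weight vector and a bias and predicts with the linear decision rule sign(w·x − b) used by SVM; the values of `w_star` and `b_star` are made up rather than learned. The instance-based part is a plain kNN classifier that keeps every training example and takes a majority vote among the k nearest ones by Euclidean distance; the toy dataset and k = 3 are assumptions.

```python
import math
from collections import Counter

# Model-based: after training, only the parameters are kept.
# w_star and b_star are made-up stand-ins for learned SVM parameters.
w_star = [1.0, -1.0]
b_star = 0.5

def linear_predict(x):
    """Decision rule sign(w*·x - b*); a score of exactly 0 is mapped to +1 here."""
    score = sum(wi * xi for wi, xi in zip(w_star, x)) - b_star
    return 1 if score >= 0 else -1

# Instance-based: the whole labeled dataset is the model (toy data).
train = [
    ([1.0, 1.0], "red"),
    ([1.5, 2.0], "red"),
    ([5.0, 5.0], "blue"),
    ([6.0, 5.5], "blue"),
    ([5.5, 4.5], "blue"),
]

def knn_predict(x, k=3):
    """Return the most frequent label among the k nearest training examples."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(linear_predict([2.0, 0.0]))  # 1
print(knn_predict([5.2, 5.1]))     # blue
```

`knn_predict` does no work at training time but scans the whole dataset at prediction time, the mirror image of the model-based case. Ties in the vote are resolved by `Counter.most_common`, which orders equal counts by first occurrence.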

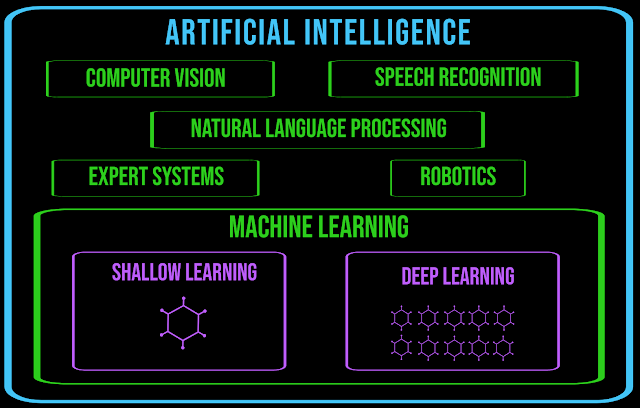

2.5 Shallow vs. Deep Learning

A shallow learning algorithm learns the parameters of the model directly from the features of the training examples. Most supervised learning algorithms are shallow. The notable exceptions are neural network learning algorithms, specifically those that build neural networks with more than one layer between input and output. Such neural networks are called deep neural networks. In deep neural network learning (or, simply, deep learning), in contrast to shallow learning, most model parameters are learned not directly from the features of the training examples, but from the outputs of the preceding layers.
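The difference can be sketched with a tiny forward pass in Python. The weights are arbitrary illustration values and no training happens here; the point is only which inputs each set of parameters sees. The shallow model applies its parameters to the features directly, while in the network with two hidden layers, the parameters of the second hidden layer and of the output layer act on the outputs of the preceding layer. The ReLU activation and the hypothetical `layer` helper are assumptions.

```python
def relu(z):
    return max(0.0, z)

def layer(inputs, weights, biases, activation=relu):
    """One fully connected layer: each unit combines all of its inputs."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

x = [1.0, 2.0]  # features of one example

# Shallow: the parameters act directly on the features.
shallow_out = sum(w * xi for w, xi in zip([0.5, -0.25], x)) + 0.1

# Deep: two hidden layers between input and output (illustrative weights).
h1 = layer(x, [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.0])
h2 = layer(h1, [[1.0, 1.0, 1.0], [0.0, 1.0, 0.0]], [0.0, 0.0])  # sees h1, not x
y = layer(h2, [[1.0, -1.0]], [0.0], activation=lambda z: z)      # sees h2, not x

print(h1, h2, y)  # [1.0, 2.0, 0.0] [3.0, 2.0] [1.0]
```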
