1.1 What is Machine Learning

Machine learning is a subfield of computer science that is concerned with building algorithms which, to be useful, rely on a collection of examples of some phenomenon. These examples can come from nature, be handcrafted by humans, or be generated by another algorithm.

Machine learning can also be defined as the process of solving a practical problem by

1. Gathering a dataset, and

2. Algorithmically building a statistical model based on that dataset. That statistical model is assumed to be used somehow to solve the practical problem.

To save keystrokes, I use the terms "learning" and "machine learning" interchangeably.

1.2 Types of Learning

1.2.1 Supervised Learning

In supervised learning, the dataset is the collection of labeled examples $\{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$.

Each element $\mathbf{x}_i$ among $N$ is called a feature vector. A feature vector is a vector in which each dimension $j = 1, \ldots, D$ contains a value that describes the example somehow. That value is called a feature and is denoted as $x^{(j)}$. For instance, if each example $\mathbf{x}$ in our collection represents a person, then the first feature, $x^{(1)}$, could contain height in cm, the second feature, $x^{(2)}$, could contain weight in kg, $x^{(3)}$ could contain gender, and so on.

For all examples in the dataset, the feature at position $j$ in the feature vector always contains the same kind of information. It means that if $x_i^{(2)}$ contains weight in kg in some example $\mathbf{x}_i$, then $x_k^{(2)}$ will also contain weight in kg in every example $\mathbf{x}_k$, $k = 1, \ldots, N$.

The label $y_i$ can be either an element belonging to a finite set of classes, or a real number, or a more complex structure, like a vector, a matrix, a tree, or a graph. Unless otherwise stated, in this book $y_i$ is either one of a finite set of classes or a real number. You can see a class as a category to which an example belongs. For instance, if your examples are email messages and your problem is spam detection, then you have two classes: "spam" and "not_spam".
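As a rough illustration of the notation above, a labeled dataset can be held as a list of (feature vector, label) pairs. The numbers, the integer class labels, and the helper name `feature` are all made up for this sketch; they are not taken from the text.

```python
from typing import List, Tuple

# Each example is a pair (feature vector x_i, label y_i).
# Position 0 always holds height in cm, position 1 always holds weight in kg.
# The labels 0 and 1 stand for two hypothetical classes.
Example = Tuple[List[float], int]

dataset: List[Example] = [
    ([170.0, 65.0], 0),
    ([182.0, 90.0], 1),
    ([158.0, 52.0], 0),
]

N = len(dataset)        # number of examples
D = len(dataset[0][0])  # number of features in each feature vector


def feature(j: int, i: int) -> float:
    """Return x_i^(j), using the 1-based indices of the text."""
    return dataset[i - 1][0][j - 1]


# Weight (feature 2) of the third example.
print(N, D, feature(2, 3))
```

Keeping every feature at a fixed position is what lets an algorithm treat "position 2" as weight in every example.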

The goal of a supervised learning algorithm is to use the dataset to produce a model that takes a feature vector $\mathbf{x}$ as input and outputs information that allows deducing the label for this feature vector. For instance, the model created using the dataset of people could take as input a feature vector describing a person and output a probability that the person has cancer.

1.2.2 Unsupervised Learning

In unsupervised learning, the dataset is a collection of unlabeled examples $\{\mathbf{x}_i\}_{i=1}^{N}$.

Again, $\mathbf{x}$ is a feature vector, and the goal of an unsupervised learning algorithm is to create a model that takes a feature vector $\mathbf{x}$ as input and either transforms it into another vector or into a value that can be used to solve a practical problem. For example, in clustering, the model returns the id of the cluster for each feature vector in the dataset. In dimensionality reduction, the output of the model is a feature vector that has fewer features than the input $\mathbf{x}$; in outlier detection, the output is a real number that indicates how $\mathbf{x}$ is different from a "typical" example in the dataset.
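To make the outlier-detection case concrete, here is a minimal sketch that scores a feature vector by its Euclidean distance from the mean of the unlabeled dataset. Treating the mean as the "typical" example is an assumption made only for this illustration; real outlier detectors use many other definitions, and the function names and data are hypothetical.

```python
import math
from typing import List


def mean_vector(data: List[List[float]]) -> List[float]:
    """Feature-wise average of all examples in the dataset."""
    n = len(data)
    return [sum(x[j] for x in data) / n for j in range(len(data[0]))]


def outlier_score(x: List[float], data: List[List[float]]) -> float:
    """Real number that grows as x moves away from the dataset mean."""
    center = mean_vector(data)
    return math.sqrt(sum((a - c) ** 2 for a, c in zip(x, center)))


# Unlabeled examples: no y_i is attached to any feature vector.
unlabeled = [[1.0, 1.0], [1.2, 0.8], [0.8, 1.2], [1.0, 1.0]]

typical = outlier_score([1.0, 1.0], unlabeled)
unusual = outlier_score([5.0, 5.0], unlabeled)
print(typical, unusual)
```

The model here takes a feature vector and returns a single number, which matches the input and output described for outlier detection.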

1.2.3 Semi-Supervised Learning

In semi-supervised learning, the dataset contains both labeled and unlabeled examples. Usually, the quantity of unlabeled examples is much higher than the number of labeled examples. The goal of a semi-supervised learning algorithm is the same as the goal of the supervised learning algorithm. The hope here is that using many unlabeled examples can help the learning algorithm to find (we might say "produce" or "compute") a better model.

1.2.4 Reinforcement Learning

Reinforcement learning is a subfield of machine learning where the machine "lives" in an environment and is capable of perceiving the state of that environment as a vector of features. The machine can execute actions in every state. Different actions bring different rewards and could also move the machine to another state of the environment. The goal of a reinforcement learning algorithm is to learn a policy. A policy is a function $f$ (similar to the model in supervised learning) that takes the feature vector of a state as input and outputs an optimal action to execute in that state. The action is optimal if it maximizes the expected average reward.
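The idea of a policy as a function from state features to an action can be sketched as follows. The states, actions, and reward values are invented for illustration, and the table of expected rewards is simply given here; a real reinforcement learning algorithm would have to learn it from interaction with the environment.

```python
from typing import Dict, Tuple

State = Tuple[int, int]  # hypothetical features: (battery_is_low, at_charger)

# Assumed expected average reward of each action in each state.
expected_reward: Dict[State, Dict[str, float]] = {
    (1, 1): {"recharge": 1.0, "explore": -0.5},
    (0, 0): {"recharge": 0.0, "explore": 2.0},
}


def policy(state: State) -> str:
    """Return the action with the highest expected average reward."""
    actions = expected_reward[state]
    return max(actions, key=lambda action: actions[action])


print(policy((1, 1)), policy((0, 0)))
```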

1.3 How Supervised Learning Works

In this section, I briefly explain how supervised learning works so that you have the picture of the whole process before we go into detail. I decided to use supervised learning as an example because it's the type of machine learning most frequently used in practice.

The supervised learning process starts with gathering the data. The data for supervised learning is a collection of pairs (input, output). Input could be anything, for example, email messages, pictures, or sensor measurements. Outputs are usually real numbers, or labels (e.g. "spam", "not_spam", "cat", "dog", "mouse", etc). In some cases, outputs are vectors (e.g., four coordinates of the rectangle around a person on the picture), sequences (e.g. ["adjective", "adjective", "noun"] for the input "big beautiful car"), or have some other structure.

Let's say the problem that you want to solve using supervised learning is spam detection. You gather the data, for example, 10,000 email messages, each with a label either "spam" or "not_spam" (you could add those labels manually or pay someone to do that for you). Now, you have to convert each email message into a feature vector.

The data analyst decides, based on their experience, how to convert a real-world entity, such as an email message, into a feature vector. One common way to convert a text into a feature vector, called bag of words, is to take a dictionary of English words (let's say it contains 20,000 alphabetically sorted words) and stipulate that in our feature vector:

• the first feature is equal to 1 if the email message contains the word "a"; otherwise, this feature is 0;

• the second feature is equal to 1 if the email message contains the word "aaron"; otherwise, this feature equals 0;

• ...

• the feature at position 20,000 is equal to 1 if the email message contains the word "zulu"; otherwise, this feature is equal to 0.

You repeat the above procedure for every email message in our collection, which gives us 10,000 feature vectors (each vector having the dimensionality of 20,000) and a label ("spam"/"not_spam") for each of them.
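The bag-of-words conversion described above can be sketched in a few lines. The six-word vocabulary, the two messages, and the simple regular-expression tokenizer are assumptions made to keep the example small; the text itself assumes a 20,000-word dictionary and does not say how messages are split into words.

```python
import re
from typing import List


def bag_of_words(message: str, vocabulary: List[str]) -> List[int]:
    """Binary feature vector: 1 if the vocabulary word occurs in the message."""
    words = set(re.findall(r"[a-z0-9']+", message.lower()))
    return [1 if word in words else 0 for word in vocabulary]


# Tiny stand-in for the alphabetically sorted dictionary of the text.
vocabulary = sorted(["a", "aaron", "free", "meeting", "money", "zulu"])

emails = ["Free money for a friend", "Aaron moved the meeting"]
vectors = [bag_of_words(email, vocabulary) for email in emails]

for email, vector in zip(emails, vectors):
    print(vector, email)
```

Every message maps to a vector of the same length as the vocabulary, so position j means the same word in every example.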

Now you have machine-readable input data, but the output labels are still in the form of human-readable text. Some learning algorithms require transforming labels into numbers. For example, some algorithms require numbers like 0 (to represent the label "not_spam") and 1 (to represent the label "spam"). The algorithm I use to illustrate supervised learning is called Support Vector Machine (SVM). This algorithm requires that the positive label (in our case it's "spam") has the numeric value of +1 (one), and the negative label ("not_spam") has the value of -1 (minus one).
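A minimal sketch of that label conversion for the SVM case follows; the names `LABEL_TO_NUMBER` and `encode_labels` are hypothetical.

```python
from typing import Dict, List

# Numeric values required by SVM: +1 for the positive class, -1 for the negative.
LABEL_TO_NUMBER: Dict[str, int] = {"spam": +1, "not_spam": -1}


def encode_labels(labels: List[str]) -> List[int]:
    """Translate human-readable labels into the numbers SVM expects."""
    return [LABEL_TO_NUMBER[label] for label in labels]


print(encode_labels(["spam", "not_spam", "spam"]))
```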

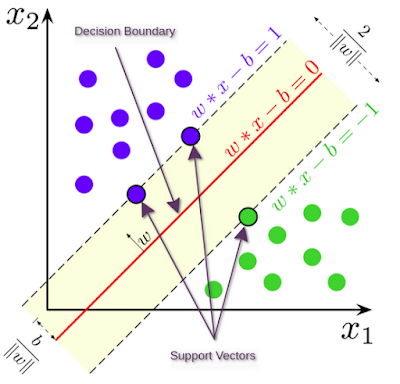

At this point, you have a dataset and a learning algorithm, so you are ready to apply the learning algorithm to the dataset to get the model. SVM sees every feature vector as a point in a high-dimensional space (in our case, the space is 20,000-dimensional). The algorithm puts all feature vectors on an imaginary 20,000-dimensional plot and draws an imaginary 19,999-dimensional line (a hyperplane) that separates examples with positive labels from examples with negative labels. In machine learning, the boundary separating the examples of different classes is called the decision boundary. The equation of the hyperplane is given by two parameters, a real-valued vector $\mathbf{w}$ of the same dimensionality as our input feature vector $\mathbf{x}$, and a real number $b$, like this:

$$\mathbf{w}\mathbf{x} - b = 0,$$

where the expression $\mathbf{w}\mathbf{x}$ means $w^{(1)}x^{(1)} + w^{(2)}x^{(2)} + \ldots + w^{(D)}x^{(D)}$, and $D$ is the number of dimensions of the feature vector $\mathbf{x}$.

(If some equations aren't clear to you right now, in Chapter 2 we revisit the math and statistical concepts necessary to understand them. For the moment, try to get an intuition of what's happening here. It all becomes more clear after you read the next chapter.)

Now, the predicted label for some input feature vector $\mathbf{x}$ is given like this:

$$y = \operatorname{sign}(\mathbf{w}\mathbf{x} - b),$$

where sign is a mathematical operator that takes any value as input and returns +1 if the input is a positive number or -1 if the input is a negative number.
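The prediction rule, the sign of wx - b, translates almost directly into code. This is only a sketch: the parameter values are arbitrary, and mapping an input of exactly zero to +1 is an assumption, since the text defines sign only for positive and negative inputs.

```python
from typing import Sequence


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    """w(1)x(1) + w(2)x(2) + ... + w(D)x(D)."""
    return sum(wj * xj for wj, xj in zip(w, x))


def sign(value: float) -> int:
    """+1 for positive input, -1 for negative; zero goes to +1 by assumption."""
    return 1 if value >= 0 else -1


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Predicted label y = sign(wx - b)."""
    return sign(dot(w, x) - b)


w, b = [1.0, -2.0], 0.5             # arbitrary illustrative parameters
print(predict(w, b, [3.0, 1.0]))    # wx - b = 3 - 2 - 0.5 = 0.5
print(predict(w, b, [0.0, 1.0]))    # wx - b = -2 - 0.5 = -2.5
```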

We would also prefer that the hyperplane separates positive examples from negative ones with the largest margin. The margin is the distance between the closest examples of two classes, as defined by the decision boundary. A large margin contributes to a better generalization, that is, how well the model will classify new examples in the future. To achieve that, we need to minimize the Euclidean norm of $\mathbf{w}$, denoted by $\|\mathbf{w}\|$.

So, the optimization problem that we want the machine to solve looks like this:

Minimize $\|\mathbf{w}\|$ subject to $y_i(\mathbf{w}\mathbf{x}_i - b) \geq 1$ for $i = 1, \ldots, N$. The expression $y_i(\mathbf{w}\mathbf{x}_i - b) \geq 1$ is just a compact way to write two constraints: $\mathbf{w}\mathbf{x}_i - b \geq +1$ if $y_i = +1$, and $\mathbf{w}\mathbf{x}_i - b \leq -1$ if $y_i = -1$.

The solution of this optimization problem, given by the optimal values $\mathbf{w}^*$ and $b^*$, is called the statistical model, or, simply, the model. The process of building the model is called training.

For two-dimensional feature vectors, the problem and the solution can be visualized as shown in fig. 1. The blue and orange circles represent, respectively, positive and negative examples, and the line given by $\mathbf{w}\mathbf{x} - b = 0$ is the decision boundary.

Why, by minimizing the norm of $\mathbf{w}$, do we find the highest margin between the two classes? Geometrically, the equations $\mathbf{w}\mathbf{x} - b = 1$ and $\mathbf{w}\mathbf{x} - b = -1$ define two parallel hyperplanes, as you see in fig. 1. The distance between these hyperplanes is given by $\frac{2}{\|\mathbf{w}\|}$, so the smaller the norm $\|\mathbf{w}\|$, the larger the distance between these two hyperplanes.
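The relationship between the norm of w and the margin can be checked numerically. The sketch below computes the distance 2 divided by the norm of w and tests whether a candidate (w, b) satisfies the constraint y(wx - b) >= 1 on every example. The two-point dataset and the candidate parameters are made up for illustration.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (feature vector, label in {+1, -1})


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wj * xj for wj, xj in zip(w, x))


def norm(w: Sequence[float]) -> float:
    """Euclidean norm of w."""
    return math.sqrt(sum(wj * wj for wj in w))


def margin_width(w: Sequence[float]) -> float:
    """Distance between the hyperplanes wx - b = 1 and wx - b = -1."""
    return 2.0 / norm(w)


def satisfies_constraints(w: Sequence[float], b: float,
                          examples: List[Example]) -> bool:
    """True if y_i (w x_i - b) >= 1 holds for every example."""
    return all(y * (dot(w, x) - b) >= 1 for x, y in examples)


examples: List[Example] = [([1.0, 1.0], +1), ([-1.0, -1.0], -1)]

print(margin_width([3.0, 4.0]), margin_width([1.5, 2.0]))
print(satisfies_constraints([0.5, 0.5], 0.0, examples))
print(satisfies_constraints([0.25, 0.25], 0.0, examples))
```

Halving w doubles the margin, but w cannot shrink indefinitely: at some point the constraints stop holding, which is why the problem is a constrained minimization.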

That's how Support Vector Machines work. This particular version of the algorithm builds the so-called linear model. It's called linear because the decision boundary is a straight line (or a plane, or a hyperplane). SVM can also incorporate kernels that can make the decision boundary arbitrarily non-linear. In some cases, it could be impossible to perfectly separate the two groups of points because of noise in the data, errors of labeling, or outliers (examples very different from a "typical" example in the dataset). Another version of SVM can also incorporate a penalty hyperparameter for misclassification of training examples of specific classes.
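To give a feel for what training might look like, here is a small sketch that fits a linear model by subgradient descent on a regularized hinge loss. This is a soft-margin variant chosen for brevity, not the exact constrained problem stated earlier and not necessarily how production SVM solvers work. The function name, the hyperparameter values (`learning_rate`, `reg`, `epochs`), and the four-point dataset are all assumptions.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (feature vector, label in {+1, -1})


def train_linear_svm(examples: List[Example], learning_rate: float = 0.1,
                     reg: float = 0.01, epochs: int = 50):
    """Return (w, b) found by subgradient descent on a regularized hinge loss."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            score = sum(wj * xj for wj, xj in zip(w, x)) - b
            if y * score < 1:
                # Constraint y(wx - b) >= 1 is violated: push w and b toward it.
                w = [wj + learning_rate * (y * xj - reg * wj)
                     for wj, xj in zip(w, x)]
                b -= learning_rate * y
            else:
                # Constraint holds: only shrink w, which widens the margin.
                w = [wj - learning_rate * reg * wj for wj in w]
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """sign(wx - b), with zero mapped to +1 by assumption."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) - b >= 0 else -1


training_set: List[Example] = [
    ([2.0, 2.0], +1), ([3.0, 3.0], +1),
    ([-2.0, -2.0], -1), ([-3.0, -3.0], -1),
]

w, b = train_linear_svm(training_set)
predictions = [predict(w, b, x) for x, _ in training_set]
print(w, b, predictions)
```

The `reg` value plays a role loosely similar to the penalty hyperparameter mentioned above: it trades a wider margin against constraint violations.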

At this point, you should retain the following: any classification learning algorithm that builds a model implicitly or explicitly creates a decision boundary. The decision boundary can be straight, or curved, or it can have a complex form, or it can be a superposition of some geometrical figures. The form of the decision boundary determines the accuracy of the model (that is, the ratio of examples whose labels are predicted correctly). The form of the decision boundary, and the way it is algorithmically or mathematically computed based on the training data, differentiates one learning algorithm from another.

In practice, there are two other essential differentiators of learning algorithms to consider: speed of model building and prediction processing time. In many practical cases, you would prefer a learning algorithm that builds a less accurate model fast. Additionally, you might prefer a less accurate model that is much quicker at making predictions.

1.4 Why the Model Works on New Data

Why is a machine-learned model capable of predicting correctly the labels of new, previously unseen examples? To understand that, look at the plot in fig. 1. If two classes are separable from one another by a decision boundary, then, obviously, examples that belong to each class are located in two different subspaces which the decision boundary creates.

If the examples used for training were selected randomly, independently of one another, and following the same procedure, then, statistically, it is more likely that the new negative example will be located on the plot somewhere not too far from other negative examples. The same concerns the new positive example: it will likely come from the surroundings of other positive examples. In such a case, our decision boundary will still, with high probability, separate well new positive and negative examples from one another. For other, less likely situations, our model will make errors, but because such situations are less likely, the number of errors will likely be smaller than the number of correct predictions.

Intuitively, the larger the set of training examples, the more unlikely it is that the new examples will be dissimilar to (and lie on the plot far from) the examples used for training. To minimize the probability of making errors on new examples, the SVM algorithm, by looking for the largest margin, explicitly tries to draw the decision boundary in such a way that it lies as far as possible from examples of both classes.
